Adopting Nix

I recently had the chance to refactor the build process and CI pipeline of a couple of projects using nix. These projects were using a combination of Makefiles, Dockerfiles, and GitHub artifacts. The hope was that a nix-based build would solve a range of problems that I’ll describe in more detail below. I had looked at nix before and was somewhat familiar with what it does, but I had never used it in real projects. In this post I’ll describe the problems that I hoped nix would solve, whether it succeeded, and some of the challenges I ran into.

What is Nix?

It’s a bit challenging to explain what nix is. The name refers to a language, a package manager, an immutable graph database, a build system, and also an operating system. When git became popular, it was easy to explain by comparing it with existing version control systems like svn or mercurial. Nix doesn’t map directly to an existing technology. People have compared it to Docker or Homebrew, but neither comparison quite does it justice.

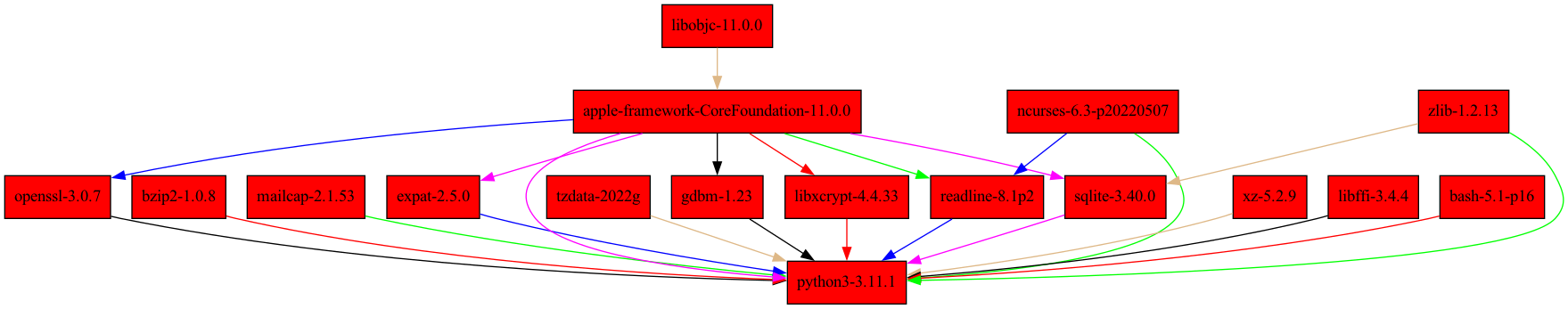

Leaving the operating system NixOS aside, one way to think of nix is as a language-specific dependency manager like cargo for Rust or npm for Node, but for any software, and with stronger functional guarantees. When you build a binary, let’s say python3.11, nix knows the full transitive dependency graph, all the way down to the low-level system libraries and compilers. Building python through nix will build all dependencies from scratch, unless they can be re-used from previous builds or downloaded from a binary cache. For example, the image above shows the dependency graph to build python3.11 on MacOS, generated using nix-store -q --graph /nix/store/f8fdbfylqzwmil8yl1kwkbw620p49il8-python3-3.11.1/bin/python | dot -Tpng > graph.png.

When nix builds something, it’s stored in /nix/store, which is essentially an immutable graph database, under a path containing a hash that encodes this full dependency graph. For example, the python artifacts from above are stored in /nix/store/f8fdbfylqzwmil8yl1kwkbw620p49il8-python3-3.11.1. If any input dependencies (including the build script) were different, the hash would be different too. The sandbox is what makes this hash trustworthy: nix builds software in a sandbox where only explicitly declared input dependencies are available, so the build process cannot accidentally use libraries installed on your local system that are not part of the dependency graph. That can be quite inconvenient, but it’s also a huge feature.
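To make the hashing concrete, here is a minimal sketch in the style of nix-pills, using the low-level builtins.derivation primitive instead of nixpkgs. The file name hello.nix and the derivation itself are hypothetical. Running nix-build hello.nix should print a path of the form /nix/store/<hash>-hello-txt; editing anything in the attribute set, even the echo command in args, yields a different hash.

```nix
# hello.nix: a hypothetical, minimal derivation built with the raw primitive.
builtins.derivation {
  name = "hello-txt";
  # The platform is an input too; builtins.currentSystem is allowed under nix-build.
  system = builtins.currentSystem;
  # /bin/sh is one of the very few paths available to the build by default.
  builder = "/bin/sh";
  # The build script is part of the inputs: changing it changes the store path hash.
  args = [ "-c" "echo hello > $out" ];
}
```

This relies on /bin/sh being available to the build: on Linux the sandbox exposes a minimal shell at that path by default, and on MacOS, where sandboxing is off by default, it is simply the system shell.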

Together, the hashing and the sandbox make builds fully reproducible and declarative, which allows you to:

- Copy software, like the python graph above, from one nix store to another across machines of the same platform, with the guarantee that it just works

- Install different versions of the same software, e.g. different Python versions, side by side without them getting in each other’s way

- Create sandboxed developer environments where specific versions of tools are available, without any virtualization

- Build Docker images without using Docker

- Manage an operating system in a declarative way and roll back changes when something breaks

Perhaps the biggest selling point of nix is that it allows you to replace a bunch of currently separate tools and workflows with a single tool.

Let’s look at some of the problems I hoped nix would help solve. In these examples I use flakes, which are a relatively new feature of nix.
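Since the rest of this post uses flakes, here is a minimal, hypothetical flake.nix for orientation. The description, the single x86_64-linux system, the nixos-unstable branch, and pkgs.hello standing in for the project’s own package are all assumptions. It also shows how a sandboxed developer environment is declared.

```nix
{
  description = "Example project (hypothetical)";

  inputs.nixpkgs.url = "github:NixOS/nixpkgs/nixos-unstable";

  outputs = { self, nixpkgs }:
    let
      # Assumption: a single Linux system; real projects usually list several.
      system = "x86_64-linux";
      pkgs = nixpkgs.legacyPackages.${system};
    in
    {
      # `nix build` with no arguments builds packages.<system>.default.
      packages.${system}.default = pkgs.hello; # stand-in for the project's own package

      # `nix develop` opens a shell with these tools on PATH, without any VM or container.
      devShells.${system}.default = pkgs.mkShell {
        packages = [ pkgs.python311 pkgs.nodejs ];
      };
    };
}
```

nix build builds the default package, nix develop opens the developer shell, and the exact nixpkgs revision is pinned in flake.lock on first use.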

Reproducible CI pipelines

The CI pipeline, GitHub Actions in our case, was not runnable locally. This meant a trial-and-error loop of git commit -am "CI fix" and pushing, where each iteration could take several minutes.

Instead of running project-specific code in GitHub Actions, the goal was to run nix build and nix flake check in CI. These are the exact same commands one can run locally, so they should work the same way: if it builds locally, it should build in CI. The GitHub Actions workflow then reduces to something like this (in reality it’s more complicated due to caching and authentication):

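```yaml
jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      # Nix is not preinstalled on GitHub runners; this action is one common way to install it
      - uses: cachix/install-nix-action@v22
      # caching stuff
      - run: nix build -L .#outputName
      # flake check takes a flake reference (default: the current directory), not an output
      - run: nix flake check -L
      # caching stuff
```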
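For reference, nix flake check evaluates the whole flake and builds the derivations under checks.<system> for the current system. A sketch of such an output, assuming it sits inside outputs next to packages, with self, pkgs, and system in scope as in a typical flake; the check names and the choice of nixpkgs-fmt as the linter are hypothetical:

```nix
# Fragment of a flake's `outputs` (sketch; `self`, `pkgs`, and `system` assumed in scope).
# A check passes if its derivation builds successfully.
checks.${system} = {
  # Re-use the regular package build as a check.
  build = self.packages.${system}.default;

  # A lint step: runCommand runs the script in the sandbox and must create $out.
  format = pkgs.runCommand "check-format" { nativeBuildInputs = [ pkgs.nixpkgs-fmt ]; } ''
    nixpkgs-fmt --check ${./.}
    touch $out
  '';
};
```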

For a developer on a Linux-based machine, the pipeline is indeed perfectly reproducible locally, since CI uses the same architecture and thus the same dependency graph. But MacOS has a different set of dependencies. If you want to support building on both platforms, you must account for the differences in your nix files, e.g.:

```nix
buildInputs = [
  # build inputs shared by all platforms (Linux and Darwin)
] ++ pkgs.lib.optionals pkgs.stdenv.isDarwin [
  # additional Darwin-specific inputs
  pkgs.libiconv
];
```

This is understandable from an architecture perspective and not a problem with nix. The real problem is that it’s difficult to test the Linux build from a MacOS machine. The most reliable option seems to be setting up a remote builder. This doesn’t necessarily need to be a remote machine; it could be a local VM or a Docker container using nix-docker. While this works, setting it up and managing it manually can be a bit of work, and documentation is lacking.
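As a sketch of the remote builder setup on MacOS, assuming nix-darwin manages the Nix configuration: the host name, SSH user, and key path below are hypothetical, and the builder can be a VM or container as long as it runs Nix and accepts SSH connections.

```nix
# nix-darwin module (sketch): delegate Linux builds to a remote builder.
{
  nix.distributedBuilds = true;
  nix.buildMachines = [
    {
      hostName = "linux-builder.example"; # hypothetical host (VM, container, or machine)
      system = "x86_64-linux";
      sshUser = "builder";                 # hypothetical user
      sshKey = "/etc/nix/builder_ed25519"; # hypothetical key path
      maxJobs = 4;
    }
  ];
  # Let the builder download dependencies from binary caches instead of receiving them over SSH.
  nix.settings.builders-use-substitutes = true;
}
```

With this in place, building a Linux output from the Mac, e.g. nix build .#packages.x86_64-linux.default, should be delegated to the builder. Without nix-darwin, the same information goes into the builders setting of nix.conf.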

There now appears to be a new nixpkgs#darwin.builder, which seems easier to set up, but I haven’t had the chance to try it. There are a few subtle differences between how nix works on MacOS vs. Linux, but more on those below.

Fast and easy Docker image builds

Docker images were slow to build in our CI. When the Docker build cache was busted, a build could take 10-15 minutes. Dockerfiles are simple to write initially, but they can become complex and difficult to maintain once you optimize layer caching to avoid full rebuilds.

Nix can build Docker images without needing to run Docker. This is possible because the community has written utilities (dockerTools in nixpkgs) that directly generate images adhering to the Docker Image Specification. Because nix already knows the build process and dependency graph of the binary you want to run, the generated image contains only what that binary actually needs. Images are fast to build because nix re-uses its cached work from /nix/store and just needs to copy stuff. Generating a Docker image after you’ve already run nix build (e.g. for testing) only takes a few seconds. By using buildLayeredImage instead of buildImage, you can generate layers that are re-used when pushing or pulling an image.

Adding a Docker image output to a nix flake takes only a few lines of code and may look something like this:

```nix
pkgs.dockerTools.buildLayeredImage {
  name = "app-name";
  tag = "latest";
  # appPackage is the derivation for our app.
  # We add utilities that are useful to have inside the container.
  contents = [ appPackage pkgs.bash pkgs.coreutils pkgs.dockerTools.caCertificates ];
}
```
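For completeness, a sketch of how such an image might be exposed as the dockerImageOutput flake output that the CI step below builds. It assumes system, pkgs, and appPackage are in scope, that system is a Linux system (an image built from MacOS packages would contain Darwin binaries), and that the app’s binary is called app-name.

```nix
# Fragment of a flake's `outputs` (sketch; `system` must be Linux, `pkgs` and `appPackage` in scope).
packages.${system}.dockerImageOutput = pkgs.dockerTools.buildLayeredImage {
  name = "app-name";
  tag = "latest";
  contents = [ appPackage pkgs.bash pkgs.coreutils pkgs.dockerTools.caCertificates ];
  # Default command for `docker run`; the binary name `app-name` is hypothetical.
  config.Cmd = [ "${appPackage}/bin/app-name" ];
};
```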

You can find more examples here. In GitHub Actions, the Docker image can be built, loaded, and pushed as follows:

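```yaml
- name: Load and push docker image
  run: |
    # Build the image using nix (the result symlink points to an image tarball)
    nix build -L -o docker_image .#dockerImageOutput
    # Load the image into Docker
    docker load < docker_image
    # Add tags
    docker tag ...
    # --all-tags needs a repository name, not just the registry host
    docker push --all-tags your.registry.com/app-name
```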

Code duplication

Makefiles, Dockerfiles, and CI pipelines often end up having significant code overlap. Some projects require a multi-step build process, so it makes sense to use a Makefile to streamline it. The Dockerfile often uses code that’s quite similar to the Makefile, but with Docker-specific changes and caching optimizations, which is why it’s not always possible to re-use the Makefile. Some of the same code may also be present in the CI pipeline to run automated tests.

With nix, once the .nix files are written, nix build and nix flake check can replace all of the above:

- make target is replaced by nix build .#target

- Dockerfiles are replaced by nix build .#dockerTarget, as described in the Docker section above

- CI tests are replaced by nix flake check, which re-uses the nix build and runs additional test and lint commands
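To illustrate what replacing a Makefile target can look like, here is a sketch of a multi-step build as a stdenv.mkDerivation output named target, matching nix build .#target. The script path, source file, and compiler command are hypothetical, and system and pkgs are assumed to be in scope inside the flake’s outputs.

```nix
# Fragment of a flake's `outputs` (sketch; `pkgs` and `system` assumed in scope).
packages.${system}.target = pkgs.stdenv.mkDerivation {
  pname = "target";
  version = "0.1.0";
  src = ./.;

  # Each former Makefile step becomes a command in the build phase.
  buildPhase = ''
    runHook preBuild
    bash scripts/codegen.sh        # hypothetical code-generation step
    cc -O2 -o target src/main.c    # hypothetical compile step
    runHook postBuild
  '';

  # Only what is copied into $out becomes part of the result.
  installPhase = ''
    runHook preInstall
    mkdir -p $out/bin
    cp target $out/bin/
    runHook postInstall
  '';
};
```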

Sharing artifacts

In our case, we had a Rust binary that depends on a shared library through bindings generated with bindgen. The shared library is the output of a Go project living in a separate repository. This dependency shows up in multiple places: developers need the shared library for their architecture (MacOS or Linux) to compile and run locally, Docker images must contain the shared library built for Linux, and CI needs the shared library to run tests.

Originally, we used GitHub artifacts to publish the shared library for multiple architectures. This meant CI and the Dockerfile had to make authenticated HTTP requests to GitHub to fetch the right version of the shared library, which required a bunch of boilerplate code that had to be maintained. Developers had to either download the right version and architecture of the library manually, or build it themselves locally.

Nix, in particular flakes, removes much of this complexity. With a flake for both the shared library and the Rust project, it’s easy to declare this dependency and let nix handle the rest. When the Rust binary is built, nix automatically fetches the library’s flake and builds the shared library as a dependency, whether locally or in CI. Using Cachix, it’s possible to avoid rebuilding the shared library entirely: it can be built once in GitHub CI and uploaded to the cache, and any project that depends on it will fetch it from there.
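A sketch of the Rust project’s flake declaring the Go library as an input. The repository github:your-org/go-shared-lib, its packages.<system>.default output, the Cachix cache name your-cache (with its public key left as a placeholder), and the use of nixpkgs’ buildRustPackage are all assumptions; rustPlatform.bindgenHook is the nixpkgs hook that makes libclang available to bindgen.

```nix
{
  inputs = {
    nixpkgs.url = "github:NixOS/nixpkgs/nixos-unstable";
    go-shared-lib.url = "github:your-org/go-shared-lib"; # hypothetical repository
    # Build both projects against the same nixpkgs revision.
    go-shared-lib.inputs.nixpkgs.follows = "nixpkgs";
  };

  # Lets users opt in to fetching pre-built artifacts from a (hypothetical) Cachix cache.
  nixConfig = {
    extra-substituters = [ "https://your-cache.cachix.org" ];
    extra-trusted-public-keys = [ "your-cache.cachix.org-1:<public-key>" ];
  };

  outputs = { self, nixpkgs, go-shared-lib }:
    let
      system = "x86_64-linux";
      pkgs = nixpkgs.legacyPackages.${system};
      sharedLib = go-shared-lib.packages.${system}.default;
    in
    {
      packages.${system}.default = pkgs.rustPlatform.buildRustPackage {
        pname = "app";
        version = "0.1.0";
        src = ./.;
        cargoLock.lockFile = ./Cargo.lock;
        # bindgen needs libclang at build time; this hook sets it up.
        nativeBuildInputs = [ pkgs.rustPlatform.bindgenHook ];
        # The Go shared library is just another build input.
        buildInputs = [ sharedLib ];
      };
    };
}
```

Because go-shared-lib.inputs.nixpkgs.follows points at the same nixpkgs, both projects share one set of system libraries, and flake.lock pins the library to an exact commit.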

Implicit dependencies

We’ve also run into failing Docker builds because shared libraries were compiled with slightly different versions of glibc in GitHub Actions on Ubuntu 20.04 vs. 22.04. This is exactly the problem that Nix solves. Nix doesn’t care what system libraries are present, since the sandboxed build process doesn’t allow the use of an implicit system-level glibc. If it works on one machine, it’s guaranteed to work on another of the same architecture.

The Bad

Adopting nix comes with some challenges. Many of them are related to (the lack of) documentation and tooling.

The Nix Language

The Nix language feels a bit like taking a purely functional language like Haskell, removing its beautiful type system, and then mixing in some JavaScript. It’s not pretty, and type-checking it doesn’t seem easy. It reminds me a bit of writing jsonnet, but with more complexity due to the bigger scope in functionality. Part of it is the language itself, but the bigger problem is the lack of tooling and editor integration. There are a few early-stage nix language servers, such as rnix-lsp and nil, which I hope will make the experience smoother in the future.
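To give a flavor of the language, here is a small, self-contained expression (hypothetical file example.nix) showing lambdas with destructured arguments and defaults, string interpolation, attribute sets, and the // merge operator. It can be evaluated with nix-instantiate --eval --strict example.nix.

```nix
let
  # A function taking an attribute set, with a default value for `greeting`.
  greet = { name, greeting ? "Hello" }: "${greeting}, ${name}!";

  # Attribute sets look a bit like JavaScript objects.
  base = { language = "nix"; lazy = true; };

  # `//` merges two sets; attributes on the right win.
  extended = base // { typed = false; };
in
{
  message = greet { name = "world"; };
  keys = builtins.attrNames extended;
  doubled = map (x: x * 2) [ 1 2 3 ];
}
```

The message attribute evaluates to "Hello, world!" and doubled to [ 2 4 6 ]. Nothing here is type-checked: calling greet without name only fails when that call is actually evaluated.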

A related issue is that people have built complex abstractions, such as nixpkgs, on top of nix. These come with their own libraries and best practices. So you’re not just learning the nix language, but also these opinionated frameworks. And it’s often difficult to tell which is which.

Lack of Documentation and Examples

Rust has perhaps one of the best learning resources in the form of The Book. I believe this is very close to what the ideal looks like: a single, continuously updated source of truth that covers all important concepts, minus the advanced use cases.

Nix doesn’t have anything like that. Leaving the OS aside, the official manuals for nix and nixpkgs are a strange combination of recipes and reference manual. They are also outdated and difficult to navigate. The best resources are not found on the official site, but scattered across the web in various blogs and GitHub repositories.

Because Nix has been around for a while (it was first published in 2003), most of the content you find online is outdated. This isn’t nix’s fault; search engines are just bad at temporal relevance. In my experience, the best resources for adopting Nix are actively maintained examples: look for packages similar to what you need in the nixpkgs repo, or search GitHub for .nix files with language-specific keywords. While nixpkgs is useful, it uses a special structure and layers of abstraction that you must understand first. And if you use flakes, which I think solve a lot of issues, understanding nixpkgs becomes less relevant.

As a first step, I recommend going through nix-pills. This won’t quite teach you about all the current best practices, but I think it’s still the best resource for understanding what nix does at the base level.

Flake Adoption

Flakes are a new way to declare input dependencies and outputs in Nix. In a way, they compete with nixpkgs in that they allow each project to publish its own flake instead of contributing to a big monorepo. They can of course still be integrated with nixpkgs to have a central package repository, but it would likely look a bit different from how it looks today.

Flakes are not yet enabled by default, and the community seems somewhat split about their adoption. There are reasonable arguments on both sides. However, a large part of the ecosystem is building tooling around flakes and recommends using them. Looking at the most active nix community projects, most of them seem to use flakes. Personally, I think that flakes are simpler and more intuitive than the non-flake approach. They are also somewhat similar to how package managers like Cargo declare dependencies, which makes it easier for newcomers to adopt nix and feel at home.
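For reference, opting into flakes is a one-line setting. A sketch for a system managed by NixOS or nix-darwin; on other systems the equivalent line goes into nix.conf, as noted in the comment:

```nix
# NixOS / nix-darwin configuration (sketch).
# Outside these, add this line to ~/.config/nix/nix.conf instead:
#   experimental-features = nix-command flakes
{
  nix.settings.experimental-features = [ "nix-command" "flakes" ];
}
```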

This split extends to the documentation, making the problem worse: you must know whether a piece of documentation is talking about flakes or not. Almost none of the official documentation or examples mention flakes, so you wouldn’t even come across the concept from reading the official docs.

Integration with package managers is early

I wasn’t sure whether it’s fair to include this point because it’s amazing that package manager integration exists at all.

Most languages already have a tool for dependency management, e.g. cargo for Rust, pip for Python, or built-in modules for Go. By default, nix doesn’t know about these tools, yet the dependencies must somehow reach the sandboxed nix build as explicit inputs, since a regular sandboxed build has no network access to fetch them. You could do this manually, e.g. by making a vendor/ directory part of the input, but tools like crane (or cargo2nix), pip2nix, and gomod2nix automate the process, and in some cases also optimize caching.
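As an example of what these integrations look like, here is a sketch using crane for the Rust project. It assumes a flake input named crane and pkgs in scope. crane’s API has changed between releases (older versions used crane.lib.${system} instead of crane.mkLib pkgs), so treat this as illustrative rather than exact.

```nix
# Sketch: building a Rust crate with crane (assumes a flake input `crane` and `pkgs` in scope).
let
  craneLib = crane.mkLib pkgs;

  # Keep only Cargo-relevant files (Rust sources, Cargo.toml, Cargo.lock), so that
  # unrelated edits such as README changes don't trigger a rebuild.
  src = craneLib.cleanCargoSource ./.;

  # Build just the dependencies; this derivation is cached and re-used until
  # the dependency set in Cargo.toml/Cargo.lock changes.
  cargoArtifacts = craneLib.buildDepsOnly { inherit src; };
in
craneLib.buildPackage {
  inherit src cargoArtifacts;
}
```

Splitting the dependencies into their own derivation is the kind of caching optimization mentioned above: editing application code rebuilds only the final package, not every crate.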

These tools are great, but many of them are still early, and you will probably run into bugs or missing features. I was unable to use gomod2nix due to this issue and also ran into a few issues with Cargo integration. Contributing to these tools is a good opportunity to get involved in the community. Otherwise you may have to hack your way around some edge cases or fall back on a more manual build process.

MacOS support

Nix works okay on MacOS, but it still has important limitations and the experience isn’t as smooth as it is on Linux. The difference in sandboxing support for MacOS in particular can be annoying. Remote builders, as mentioned earlier, are a workaround, but they are painful to set up.